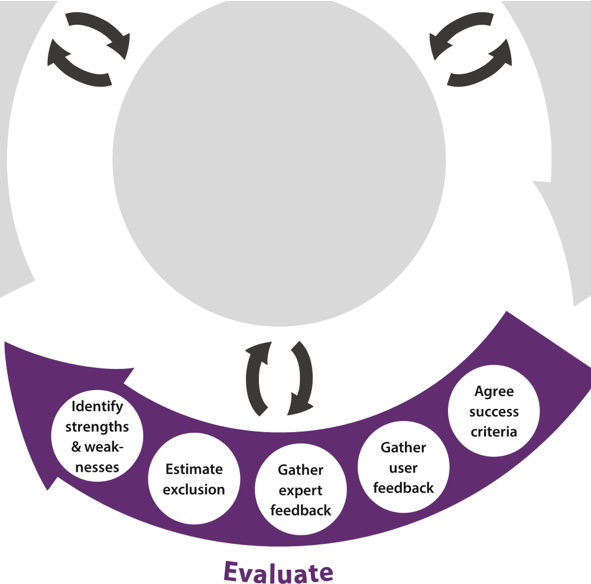

The Evaluate phase is about examining the concepts from the Create phase to determine how well they meet the needs identified in the Explore phase. The first activity in this phase is to agree the success criteria, which should be based on the wants & needs of users and other stakeholders that were identified within the Explore phase. These criteria will then be used to inform the plan for gathering feedback from users and experts and to summarise the results.

This page describes the activities within the Evaluate phase of the Inclusive Design Wheel, and explains how they apply to the inclusive design of transport services.

Evaluate activities answer the question ‘How well are the needs being met by the proposed solutions?’.

Agree success criteria

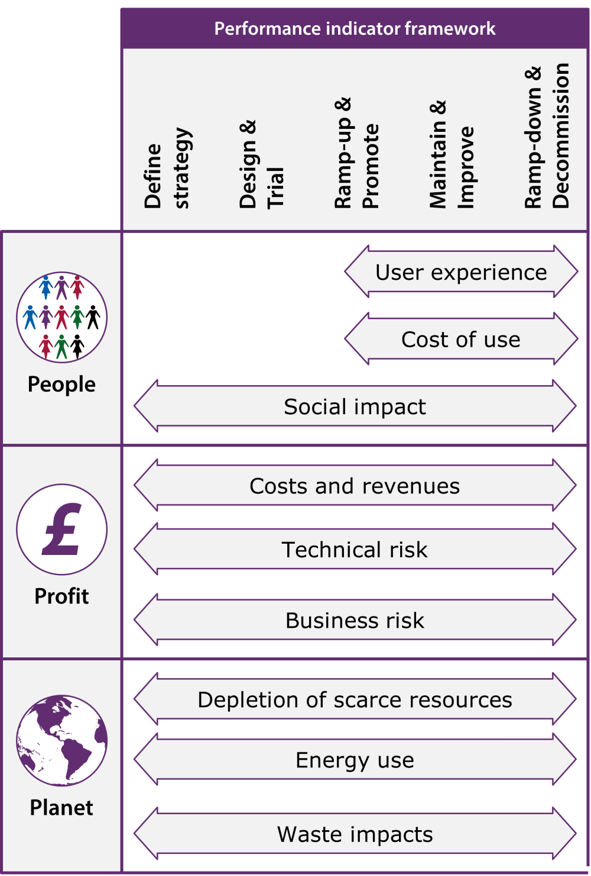

It is important to consider the question ‘what does success look like?’ for an inclusive transport service. It may be helpful to use the framework shown above to ensure that a wide range of success criteria is considered, covering:

- People criteria: user experience, cost of use and social impact.

- Profit criteria: costs and revenues, technical risk and commercial business risk.

- Planet criteria: depletion of scarce resources, energy use and waste impacts.

Furthermore, the success criteria should attempt to address the whole life-cycle of the proposition, including the following phases:

- Define strategy

- Design & Trial

- Ramp-up & Promote

- Maintain & Improve

- Ramp-down & Decommission

The success criteria should, where possible, be specific and measurable. They should represent the key wants & needs that were identified in the Explore phase, as well as other variables that are critical to the success of the project. For example, the number of wheelchair users travelling on a bus service could be one measure of its wheelchair accessibility. This is an example of a user-related criterion that could be measured throughout the life-cycle of a more accessible bus service.

Gather user feedback

User feedback can be obtained at different levels of formality and detail, using methods such as:

- Contextual enquiry

- Focus groups

- Interviews

- Questionnaires

- User trials

Within the Evaluate phase, these methods can be used to gain insights on the proposed design solutions, which may be embodied through storyboards or prototypes. Note that some of the same methods may also have been used in the Explore phase to help understand how users behave with existing solutions.

Initial user feedback may be obtained within a co-creation workshop, as part of the co-design process. See the page about co-design for more detail. Focus groups, interviews and questionnaires can also be used to obtain feedback at various stages in the project. Later in the project, once a working prototype is available, formal user trials should be planned to objectively compare the performance of the proposed solution with an existing benchmark.

Whenever users are involved, it is important to take a range of ethical considerations into account. These include issues of consent, privacy, intellectual property and data protection. It is also important to consider whether the stakeholders that are planning or conducting the activities have a vested interest in achieving a particular outcome, and whether this could skew the results.

The output from the Understanding user diversity activity (within the Explore phase) should be used to inform the sampling for the user evaluation, to ensure that a good spread of users is included. The design of user trials, in particular, should also be independently reviewed to identify and mitigate any potential bias.

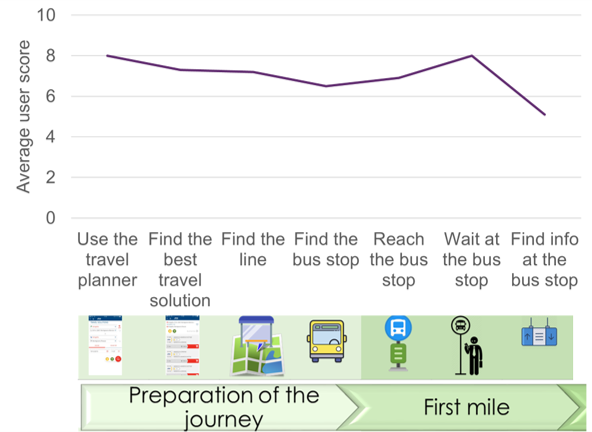

Example of user feedback from one of the DIGNITY pilot projects (Ancona). More examples like this are available within the Inclusive design log for transport.

Further information

- James Hom’s Usability Methods Toolbox website has a section on Usability testing which provides more information on objective user tests.

- The Usability Net website has an entry on Performance testing which also gives more information about usability testing.

Gather expert feedback

In this activity, a range of relevant experts use their knowledge and judgement to systematically evaluate the proposed concepts. They can provide both formative and summative feedback. Formative feedback identifies potential issues and gives recommendations for how the concept could be improved. Conversely, the purpose of summative evaluation is to objectively compare and rank different concepts.

The concepts may be evaluated from many different perspectives, such as user experience, accessibility, operational feasibility, and technical, financial or environmental risk. It is important to consider whether the experts providing the feedback have a vested interest in achieving a particular outcome, and whether this could compromise the objectivity of the feedback.

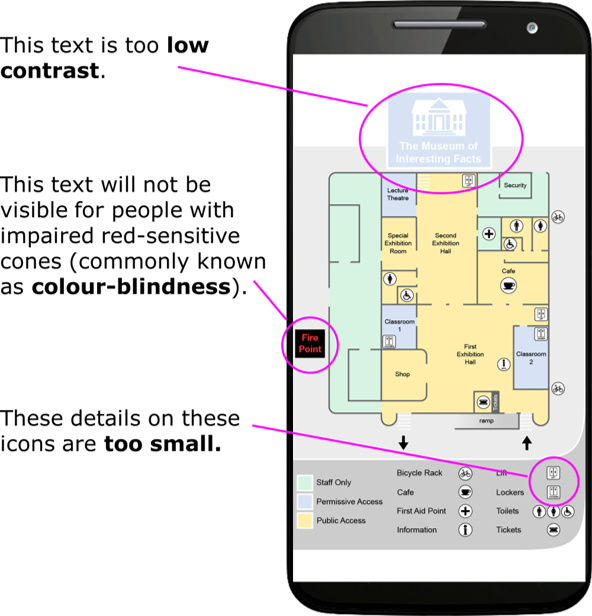

From an accessibility perspective, expert appraisals might include an access audit and/or a website accessibility audit. The capability loss simulation tools available on this website can assist with expert appraisals, helping experts to assess and communicate how capability loss affects interactions with products or services. These include the Cambridge simulation gloves and glasses. Furthermore, the Clari-Fi tool provides a way of evaluating the visual accessibility of text and icons within apps and websites that are displayed on mobile phones.

This made-up example was produced by the Engineering Design Centre, University of Cambridge, to show visual accessibility issues that are commonly found during our consultancy services. This example is described in more detail within the Inclusive design log for transport.

Further information

- James Hom’s Usability Methods Toolbox website describes various ‘inspection’ methods that can be used by experts to help them inspect and evaluate a concept or product in a systematic way.

- The Usability Net website has a section on Heuristic evaluation. This is a particularly popular method of expert appraisal, in which the product is evaluated against established guidelines or principles.

Estimate exclusion

It can be helpful to identify how many people would be excluded from using a product or service on the basis of:

- their capabilities, such as vision, hearing, thinking, reach & dexterity, and mobility;

- the technology access requirements, such as needing to access a website or install an app;

- the technology competence required to successfully interact with the product or service;

- other exclusionary factors, such as language, gender and age.

At the time of writing in November 2022, the DIGNITY datasets were the most comprehensive datasets available that covered all of these aspects. Of these datasets, the German dataset had the most robust sampling and the largest sample size (1010 participants). The questionnaire for the German survey, together with SPSS and Excel files of the participant responses, is available from the UPCommons repository.

The exclusion estimates listed below were derived from the DIGNITY German dataset, and avoid double counting of participants who are excluded on the basis of more than one factor. More detailed exclusion estimates can be provided by the authors of this toolkit, as part of our consultancy services.
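To illustrate what avoiding double counting means, the following is a minimal sketch rather than the method actually used to produce the DIGNITY estimates. The `Participant` class, the factor names and the four-person sample are all hypothetical. The sketch counts each participant once if they are excluded by at least one factor, and contrasts this with a naive total that simply adds up the exclusion for each factor.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Participant:
    """One survey participant (hypothetical structure, for illustration only)."""
    weight: float                                        # survey weight
    excluded_by: Set[str] = field(default_factory=set)   # factors that exclude this person


def overall_exclusion(participants: List[Participant]) -> float:
    """Percentage excluded by at least one factor; each person is counted once."""
    total = sum(p.weight for p in participants)
    excluded = sum(p.weight for p in participants if p.excluded_by)
    return 100.0 * excluded / total


def naive_sum_of_factors(participants: List[Participant]) -> float:
    """Adds the per-factor exclusions together, so people excluded by
    several factors are counted several times (overestimates exclusion)."""
    total = sum(p.weight for p in participants)
    counted = sum(p.weight * len(p.excluded_by) for p in participants)
    return 100.0 * counted / total


# Made-up sample: one person is excluded by two factors at once.
sample = [
    Participant(1.0),
    Participant(1.0, {"vision"}),
    Participant(1.0, {"vision", "technology access"}),
    Participant(1.0),
]

print(overall_exclusion(sample))     # 50.0
print(naive_sum_of_factors(sample))  # 75.0
```

In this made-up sample, two of the four participants are excluded, so the overall exclusion is 50%, whereas adding the per-factor figures would give 75%.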

The following are estimates of the proportion of the population who would be excluded from using a service with different technology access requirements. Please note that these are minimum figures and the exclusion for a particular service may be higher once the capability and technology competence requirements are also taken into account.

A service that requires users to:

- have a mobile phone: Exclusion is at least 2.1%

- access a website that has been designed to work on both mobile and desktop: Exclusion is at least 11.3%

- access a website while out and about: Exclusion is at least 18.3%

- access a website on a desktop or laptop computer (i.e., via a website that has been designed for desktop, but offers a poor experience on mobile): Exclusion is at least 17.5%

- install a smartphone app that requires an internet connection: Exclusion is at least 47.4%

- have previous experience with a mapping application: Exclusion is at least 58.7%

Exclusion values are expressed as weighted percentages of valid responses to the German DIGNITY survey. These exclusion estimates are described in more detail within the Inclusive design log for transport.
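As a rough illustration of a weighted percentage of valid responses, the sketch below assumes that each response carries a survey weight and that missing or invalid answers are left out of both the numerator and the denominator. This is one plausible reading of the phrase, not a description of how the DIGNITY figures were computed. The function name and the data are hypothetical.

```python
from typing import Optional, Sequence, Tuple


def weighted_exclusion_percent(
    responses: Sequence[Tuple[float, Optional[bool]]]
) -> float:
    """Weighted percentage of valid responses that indicate exclusion.

    Each response is (weight, excluded), where excluded is None when the
    answer is missing or invalid. Such responses are ignored entirely.
    """
    valid = [(w, e) for w, e in responses if e is not None]
    total_weight = sum(w for w, _ in valid)
    if total_weight == 0:
        raise ValueError("no valid responses")
    excluded_weight = sum(w for w, e in valid if e)
    return 100.0 * excluded_weight / total_weight


# Made-up responses: the third participant gave no valid answer.
responses = [(1.5, True), (0.5, False), (1.0, None), (2.0, False)]
print(weighted_exclusion_percent(responses))  # 37.5
```

Here the valid responses have a total weight of 4.0, of which 1.5 belongs to an excluded participant, giving 37.5%.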

Further information

- Keates and Clarkson’s (2003) book ‘Countering design exclusion: An introduction to inclusive design’ provides a general introduction to exclusion calculations. (Published by Springer).

- A paper by Bradley et al. (2021), ‘Predicting population exclusion for services dependent on mobile digital interfaces’, explains how exclusion figures for technology access are calculated. (Published in ‘Proceedings of Mobile HCI 2021’).

Identify strengths & weaknesses

This activity involves reviewing, summarising and presenting the findings from the evaluation activities. In doing so, it can be helpful to consider the strengths and weaknesses of the proposed solution in comparison to a defined benchmark, because it is easier to judge whether something is ‘better’ or ‘worse’ than something else than to assess whether it is ‘good’ or ‘bad’ in absolute terms. The benchmark could be an existing transport service, website or app.

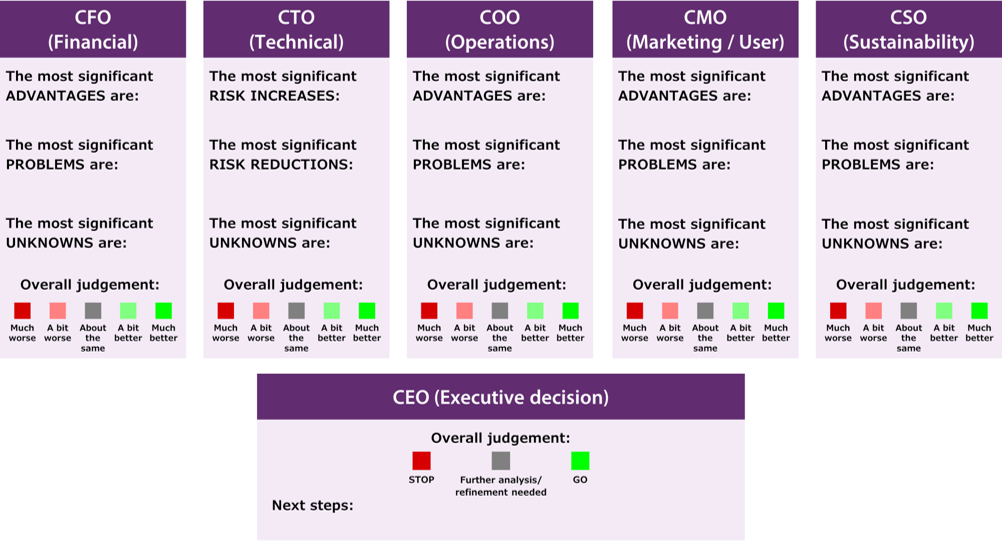

It can be useful to summarise the findings by considering the following ‘CXO’ perspectives (based on the performance indicator framework that was introduced in the Agree success criteria activity):

The relative importance of the different ‘CXO’ perspectives will depend on the objectives of the project. From the most important perspectives, the proposed solution ought to perform ‘a lot better’ than the chosen benchmark. From other perspectives, it may be acceptable for the performance of the proposed solution to be ‘about the same’ as the chosen benchmark. It is rarely acceptable for the proposed solution to perform worse than the benchmark, for any of the ‘CXO’ perspectives.
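The rule of thumb above can be sketched as a simple check. This is only an illustrative sketch: the five-point rating scale, the function name and the placeholder perspective names (‘perspective A’ and so on) are assumptions, and are not part of the toolkit.

```python
from typing import Dict, List, Set

# Hypothetical five-point scale for comparing against the benchmark.
RATING_SCALE = {
    "a lot worse": -2,
    "worse": -1,
    "about the same": 0,
    "better": 1,
    "a lot better": 2,
}


def review_against_benchmark(
    ratings: Dict[str, str], most_important: Set[str]
) -> List[str]:
    """Return a list of concerns, following the rule of thumb:
    - no perspective should perform worse than the benchmark;
    - the most important perspectives should be 'a lot better'.
    """
    concerns = []
    for perspective, rating in ratings.items():
        score = RATING_SCALE[rating]
        if score < 0:
            concerns.append(f"{perspective}: performs worse than the benchmark")
        elif perspective in most_important and score < RATING_SCALE["a lot better"]:
            concerns.append(f"{perspective}: important, but not 'a lot better'")
    return concerns


# Placeholder perspectives and made-up ratings.
ratings = {
    "perspective A": "a lot better",
    "perspective B": "about the same",
    "perspective C": "worse",
}
for concern in review_against_benchmark(ratings, most_important={"perspective A"}):
    print(concern)
```

Any concerns that such a check raises would then feed into the summary of strengths and weaknesses, rather than acting as an automatic pass or fail.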

Summarising the strengths and weaknesses in this way may identify:

- uncertainties regarding the understanding of the users;

- opportunities for refining or improving the ideas;

- gaps in the evidence to support the solutions, from one or more different perspectives.

Each of these may be resolved by planning further activities within the Explore, Create and Evaluate phases.
